It’s all about domains… | Giovane Moura (SIDN Labs)

Scholarly insights meet real-world DNS challenges. Let's explore the hidden corners of the DNS labyrinth, shedding light on our paths with pioneering research and precise measurements, one node at a time.

Published by

Simone Catania

In technology, the science behind operations is rooted in academic settings. Breakthrough developments aren’t just theorized there. They are tested, adopted and eventually shared with the general audience. DNS is no exception. Today, we’re thrilled to be talking with an expert who combines two realms that often seem to be worlds apart: the technical DNS industry and the academic sphere.

It’s a pleasure to introduce Giovane Moura, a data scientist at SIDN Labs and an assistant professor specializing in cybersecurity at Delft University of Technology (TU Delft). Giovane works with SIDN Labs, the research hub of SIDN, the registry operator of .nl, the ccTLD of the Netherlands. Giovane finds himself at the frontline of DNS operations advancements but doesn’t stop there. In the halls of TU Delft, one of the leading universities in technology worldwide, he rigorously applies academic principles to the security, performance and stability of networked systems.

Our discussion will take a deep dive into Giovane’s wide-ranging research, covering critical areas like Anycast, DNS engineering, DDoS attacks and DNS security, all topics in which he is deeply knowledgeable. We’ll engage with the academic perspective on DNS, uncovering important insights on security concerns and vulnerabilities. Keep your notebook at hand because Giovane will also share some invaluable DNS security tips, informed by years of research and practical expertise.

Brace yourselves for an engaging conversation with Giovane Moura in which academia meets DNS industry practice!

1 Your name can be found in prestigious academic publications and research work related to the DNS. What is your current role within the domain industry?

I am a data scientist at SIDN Labs, the research team of SIDN, the registry operator of the ccTLD .nl for the Netherlands. I’m also an assistant professor (part-time) at TU Delft. In addition, I supervise students at TU Delft and facilitate access to our data sets, enabling them to work towards solving real-world challenges. Consequently, they gain valuable industry experience with an authentic network operator.

I have a secondment where I work one day a week at TU Delft, sponsored by SIDN. It’s a win-win arrangement: We work to improve and safeguard the .nl zone but also work on projects and papers that will enhance the DNS’s resilience or security, contributing to the general internet community.

2 What sparked your interest and motivated you to move into DNS research and what are your current projects analyzing?

During my PhD, I studied DNS data and internet measurements. When I began working at SIDN Labs, I got access to lots of DNS data, which allowed me to engage in some really cool studies. My research efforts primarily focus on enhancing specific aspects of networks, namely, improving their security, optimizing their performance and ensuring their correctness.

I’m currently interested in phishing activities in the .nl zone and other TLD registries. I want to understand how malicious actors operate, who they target and how we can better detect their movements. I’m also fascinated by the “infrastructural side” of the DNS. For example, in the recent RFC 9199, we undertook studies and advised on how to run authoritative DNS servers faster and more robustly based on recent wide-scale internet measurement research.

3 What is the present state of research in the field of DNS? Are there any current hot topics?

DNS is a protocol dating back to the 1980s that has continued evolving since its inception. It includes a suite of protocols, a distributed database system and a client-server architecture at its core. The system is integrated with extensive routing methodologies, particularly Anycast, and encompasses a significant focus on security aspects such as phishing and spam prevention. Governance is another crucial facet of DNS.

With over 2000 pages of insightful documentation, often referred to as the DNS camel, it’s fair to say that DNS remains a bustling research field across all of these fronts.

- Regarding DNSSEC, a considerable amount of research is being conducted in the context of post-quantum computing. The key question emerging in this field is: What implications will the advent of quantum computers, capable of quickly breaking most DNSSEC signature algorithms, have for the security and integrity of the DNS infrastructure?

- Within the authoritative side, experts are diligently focusing on the automatic deployment of Anycast services to meet the demands of growing service needs effectively.

- Regarding zone contents, there’s an ongoing cat-and-mouse game concerning abusive domains. As soon as a malicious pattern is identified, offenders usually modify their strategy, instigating a continuous cycle of challenge and response.

- In recent years, numerous standards have emerged focusing on encrypting DNS traffic. Notably, DNSSEC primarily safeguards integrity but doesn’t address confidentiality. Consequently, we now have new IETF standards like DNS over TLS (DoT), DNS over HTTPS (DoH) and DNS over QUIC (DoQ) to tackle confidentiality issues. While these are formally established standards, their actual adoption presents an entirely different challenge. Therefore, we need comprehensive measurement studies to shed light on the performance and prevalence of these protocols in contemporary networks.

- DNS centralization is another issue that academia and the DNS industry focus on. We are witnessing a significant surge in traffic gravitating towards large cloud service providers. This trend concentrates power and control in the hands of these top providers and potentially compromises the resilience of the internet infrastructure. It is a concern that warrants attention from all DNS stakeholders to ensure a fair and stable digital environment.
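
To make the encrypted-DNS point above more concrete, here is a minimal sketch of how a measurement script might prepare a DoH request. It builds a DNS query in wire format and encodes it for the `dns` parameter of an HTTP GET, which is base64url without padding. The resolver URL `https://doh.example/dns-query` and the function names are hypothetical, and the sketch only constructs the URL; it does not send anything.

```python
import base64
import struct


def build_dns_query(name: str, qtype: int = 1) -> bytes:
    """Build a minimal DNS query message in wire format."""
    # Header: ID 0, flags 0x0100 (recursion desired), one question,
    # and no answer, authority or additional records.
    header = struct.pack("!HHHHHH", 0, 0x0100, 1, 0, 0, 0)
    # QNAME: each label prefixed by its length, ended by a zero byte.
    qname = b"".join(
        bytes([len(label)]) + label.encode("ascii")
        for label in name.rstrip(".").split(".")
    ) + b"\x00"
    # QTYPE (1 = A by default) followed by QCLASS 1 (IN).
    return header + qname + struct.pack("!HH", qtype, 1)


def doh_get_url(resolver_url: str, name: str, qtype: int = 1) -> str:
    """Return a DoH GET URL carrying the query in the 'dns' parameter."""
    wire = build_dns_query(name, qtype)
    # base64url encoding with the trailing '=' padding stripped.
    encoded = base64.urlsafe_b64encode(wire).rstrip(b"=").decode("ascii")
    return f"{resolver_url}?dns={encoded}"


if __name__ == "__main__":
    # Hypothetical resolver endpoint, used only for illustration.
    print(doh_get_url("https://doh.example/dns-query", "example.nl"))
```

A measurement study would send such requests to many resolvers and compare response times against plain DNS; that part is left out here.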

4 At the end of 2021, you disclosed the tsuNAME vulnerability. What does this DNS security flaw entail?

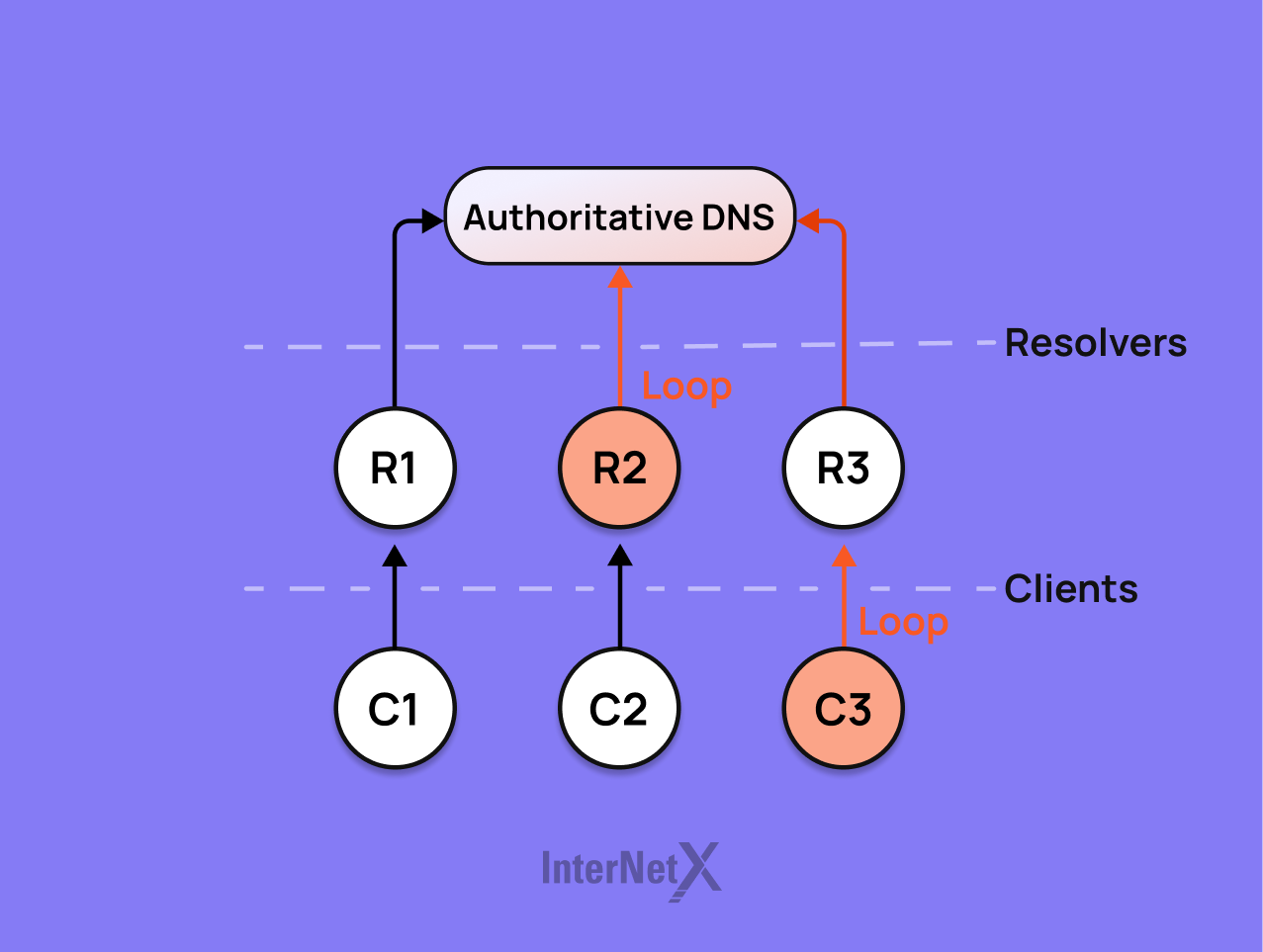

The vulnerability, which we named tsuNAME, involved clients and recursive resolvers endlessly sending queries to authoritative servers. If a record X points to Y, and Y points back to X, it creates a loop. For this looping behavior to begin, a resolver or client must identify a DNS zone loop within zone files located on different servers. Upon detecting this, some resolvers won’t simply stop querying: They will instead start looping and sending queries non-stop, hoping each query will return a different answer. When exploited deliberately, this issue could overwhelm and incapacitate authoritative servers. As a result, it would make entire DNS zones inaccessible.
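
The loop described above can be illustrated with a small sketch. It assumes a simplified view in which each record points to exactly one other name, held in a plain dictionary; real zones have multiple NS records spread across servers, so this is only an illustration of the idea. The function name `find_cycle` and the example names are hypothetical.

```python
from typing import Dict, List, Optional


def find_cycle(pointers: Dict[str, str], start: str) -> Optional[List[str]]:
    """Follow pointers from `start`; return the loop if one is found."""
    position: Dict[str, int] = {}  # name -> index in `path`
    path: List[str] = []
    current = start
    while current in pointers:
        if current in position:
            # We are back at a name already visited: return the loop,
            # repeating the first name at the end to show it closes.
            return path[position[current]:] + [current]
        position[current] = len(path)
        path.append(current)
        current = pointers[current]
    return None  # the chain ended at a name that points nowhere


if __name__ == "__main__":
    records = {"x.example": "y.example", "y.example": "x.example"}
    print(find_cycle(records, "x.example"))
```

A resolver that keeps track of visited names in this way can stop after the first pass instead of re-sending queries indefinitely.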

In its first stage, our research showed that the ccTLD .nz suffered a 50% traffic increase because of this vulnerability. It also showed that Google Public DNS (GDNS) and Cisco OpenDNS could be steered to send many DNS queries to authoritative DNS servers this way, which caused a 10-fold traffic increase for an EU-based operator.

The tsuNAME disclosure process encompassed early notification to domain operators, managing stressful responses, dealing with varying vendor responsiveness and an eight-month path from the initial discovery to the final public disclosure. To prevent tsuNAME from being used for DDoS, we worked closely with vendors and operators, and Google and OpenDNS promptly fixed their software.

In the meantime, RFC 9520, published in December 2023, proposes that resolvers cache such cycling records so they no longer loop.
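
As a rough sketch of the caching idea, the class below remembers lookups that failed and tells the caller not to retry them until a hold period has passed. The class name `FailureCache`, its methods and the five-second default are assumptions for illustration only; they are not taken from the RFC or from any resolver implementation.

```python
import time
from typing import Callable, Dict, Tuple


class FailureCache:
    """Remember failed lookups so they are not retried immediately."""

    def __init__(
        self,
        hold_seconds: float = 5.0,  # illustrative value, not a standard
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.hold_seconds = hold_seconds
        self.clock = clock
        # (name, record type) -> time until which the failure is held
        self._failed_until: Dict[Tuple[str, str], float] = {}

    def should_query(self, name: str, rtype: str) -> bool:
        """Return True if no recent failure is cached for this lookup."""
        expiry = self._failed_until.get((name, rtype))
        return expiry is None or self.clock() >= expiry

    def record_failure(self, name: str, rtype: str) -> None:
        """Hold this failure for `hold_seconds` from now."""
        self._failed_until[(name, rtype)] = self.clock() + self.hold_seconds


if __name__ == "__main__":
    cache = FailureCache()
    cache.record_failure("x.example", "NS")
    print(cache.should_query("x.example", "NS"))
```

A resolver loop would call `should_query` before contacting an authoritative server and `record_failure` whenever resolution fails, for example because of a cyclic dependency.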

5 What technical strategies can DNS operators and developers employ to bolster the defenses of DNS against DDoS attacks?

Implementing replication is crucial to defending DNS against DDoS attacks. This means setting up multiple authoritative servers and using IP Anycast within these servers, enabling them to be announced from various locations – a technique often called over-provisioning. Over-provisioning via IP Anycast provides a robust defense against DDoS attacks. By utilizing multiple authoritative servers, traffic can be evenly distributed, reducing the risk of any single server becoming overwhelmed. Additionally, the ability to announce these servers from multiple geographical locations enhances redundancy and resilience, ensuring continuous availability and performance, even if an attack targets some servers. Ultimately, this technique improves the capacity of DNS infrastructure to absorb large volumes of traffic and mitigate the potential impacts of DDoS attacks.

The Border Gateway Protocol (BGP) is a powerful defense against DDoS attacks because it enables authoritative operators to control network traffic strategically. By adjusting BGP configurations, operators can steer malicious traffic away from target servers and towards locations with the capacity to handle it or even to a sinkhole that absorbs and neutralizes the attack. We outlined this step in RFC 9199. This ability to dynamically reroute traffic protects the DNS infrastructure and minimizes service disruptions during DDoS events.

Additionally, assigning long Time To Live (TTL) values to records can be protective: resolvers that still hold a cached answer can keep serving it while the authoritative servers are under attack. The TTL value in DNS records can aid in mitigating DDoS attacks through its control over how often DNS information is updated. A shorter TTL provides more regular updates, reducing the window of opportunity for attackers to redirect traffic to illegitimate servers. Conversely, a longer TTL can be set once a threat has passed to reduce unnecessary network load. Thus, properly managing TTL values provides a dynamic mechanism for responding to and recovering from DDoS attacks.
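
The effect of the TTL during an attack can be sketched with a toy simulation. It assumes a single resolver with one cached record, an authoritative server that is completely unreachable during the outage window, and time measured in whole seconds. The function name `failed_queries` and all parameters are hypothetical; real resolvers behave in more complex ways.

```python
from typing import Iterable, Tuple


def failed_queries(
    ttl: int,
    outage: Tuple[int, int],
    query_times: Iterable[int],
) -> int:
    """Count client queries that fail during an authoritative outage."""
    outage_start, outage_end = outage
    cached_until = -1  # nothing is cached at the start
    failures = 0
    for t in sorted(query_times):
        if t < cached_until:
            continue  # answered from the resolver's cache
        if outage_start <= t < outage_end:
            failures += 1  # cache expired and the server is unreachable
        else:
            cached_until = t + ttl  # fetched and cached a fresh answer
    return failures


if __name__ == "__main__":
    times = range(0, 120, 10)  # one client query every 10 seconds
    for ttl in (5, 60, 3600):
        print(ttl, failed_queries(ttl, (30, 90), times))
```

With these made-up numbers, a 5-second TTL leaves all six queries inside the outage unanswered, a 60-second TTL leaves three, and a one-hour TTL leaves none.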

These strategies shield operators from large-scale DDoS attacks and offer resilience similar to that observed in root DNS servers.

6 How can authoritative DNS server operators enhance their service offerings? Are there any specific factors they should consider or best practices they should adopt?

If authoritative DNS server operators aim to minimize latency, the initial step would be to gauge latency from their clients using DNS over TCP traffic. This method enables the identification of clients experiencing poor performance, creating an opportunity to optimize their experience. Moreover, this approach is cost-effective and simple to implement. For resilience, we are talking about replication. Anycast is an option for large operators, but it has associated costs.
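
One way to gauge client latency from TCP traffic, assumed here for illustration, is to use the TCP handshake: on the server side, the time between sending the SYN-ACK and receiving the client's ACK approximates the round-trip time. The sketch below takes already-extracted handshake timestamps in seconds; the input format, function names and the 100 ms threshold are all hypothetical.

```python
from collections import defaultdict
from statistics import median
from typing import Dict, Iterable, List, Tuple

# (client address, time SYN-ACK was sent, time ACK was received)
Handshake = Tuple[str, float, float]


def median_rtt_ms(handshakes: Iterable[Handshake]) -> Dict[str, float]:
    """Return the median handshake RTT per client, in milliseconds."""
    samples: Dict[str, List[float]] = defaultdict(list)
    for client, synack_sent, ack_received in handshakes:
        samples[client].append((ack_received - synack_sent) * 1000.0)
    return {client: median(values) for client, values in samples.items()}


def slow_clients(
    handshakes: Iterable[Handshake], threshold_ms: float = 100.0
) -> List[str]:
    """List clients whose median RTT exceeds the threshold."""
    rtts = median_rtt_ms(handshakes)
    return sorted(c for c, rtt in rtts.items() if rtt > threshold_ms)


if __name__ == "__main__":
    observed = [
        ("192.0.2.1", 0.000, 0.020),
        ("192.0.2.1", 1.000, 1.030),
        ("198.51.100.7", 0.000, 0.250),
    ]
    print(slow_clients(observed))
```

Because the timestamps come from traffic the server already receives, this kind of measurement needs no active probing, which fits the cost-effective approach described above.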

What is an authoritative DNS server operator?

An authoritative DNS server operator manages the servers containing authoritative information about domain names within a specific zone. These operators are responsible for providing accurate DNS name resolution for their domains. As the definitive source of domain information, authoritative DNS servers ensure that clients receive the correct IP addresses when accessing websites, thereby easing internet navigation.

Key improvement factors for authoritative DNS server operators

- Reducing latency: Implementing measures such as GeoDNS, Edge DNS servers or CDNs to improve response times.

- Resiliency: Building redundancies, like deploying multiple servers or employing IP Anycast, to protect against potential failures or attacks.

- Security: Implementing DNSSEC and improving DDoS mitigation techniques to ensure the safety of the DNS information.

- Scalability: Designing systems to handle growth and increased traffic demand effectively without sacrificing performance.

- Monitoring & analysis: Monitoring server performance, latency and traffic patterns; analyzing logs for anomalies or issues needing attention.

- DNS record management: Ensuring DNS records are updated and accurate; removing old or stale records.

- Backup and recovery: Regularly backing up server data and ensuring effective recovery systems are in place.

- Optimization: Regularly re-evaluating and updating systems, software and technologies for optimal performance.

7 How does the surge in cloud computing and the expanding utilization of microservices influence DNS research and infrastructure? What potential challenges do these trends present?

The dominance of a few key players, in particular big US tech companies, has resulted in a significant concentration of web traffic. Interestingly, this shift has had unexpected benefits. For instance, when Google activated query name minimization on its public DNS, it instantaneously impacted millions of users.

Operating servers on the cloud can offer DNS operators considerable ease as it allows them to deploy new instances as and when traffic demands increase. Yet, we should heed the wise words of Bruce Schneier, reminding us that the cloud is essentially ‘someone else’s computer.’

Research will also have to follow this tendency; the performance of large DNS providers will become increasingly important if this trend continues.

8 How do you see DNS evolving in the next 5-10 years?

Drawing from past trends, we can assert with confidence that DNS will continue to evolve, just as it has consistently done over the past decades.

I anticipate the increased use of artificial intelligence (AI) and machine learning (ML) in DNS. Both AI and ML have the potential to enhance the security of DNS by efficiently identifying and blocking malicious traffic. They could also streamline DNS performance by predictively analyzing user behavior and adjusting DNS responses accordingly. For instance, should we initiate a new server in Anycast location X in response to rising traffic? As the costs related to computing and storage continue to decline, implementing ML as a method to bolster both operations and security will become cheaper.

Furthermore, the DNS community will keep proactively deploying privacy-preserving standards to safeguard users’ data.